Luminance-Contrast-Aware Foveated Rendering

SIGGRAPH 2019

¹MPI Informatik, Germany; ²Saarland University, MMCI, Germany; ³West Pomeranian University of Technology, Poland; ⁴Università della Svizzera italiana, Switzerland

Paper | BibTeX | GitHub repo (prediction model) | Source code (application) | Figure 7 errata

Errata In Figure 7, the data are plotted in pixels rather than in visual degrees, although the horizontal axis is labeled in visual degrees. The plot with the correct units is available for download here.
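For readers who want to relate the two units mentioned in the erratum, the following is a minimal sketch of the standard conversion between an on-screen extent in pixels and the visual angle it subtends. It assumes a flat display viewed head-on, with the extent centred on the line of sight; the function names, the pixel pitch, and the viewing distance are hypothetical illustration values and are not taken from the paper or its experimental setup.

```python
import math


def pixels_to_degrees(size_px, pixel_pitch_mm, viewing_distance_mm):
    """Visual angle in degrees subtended by `size_px` pixels.

    Assumes a flat screen perpendicular to the line of sight and an
    extent centred on the gaze direction (hypothetical setup).
    """
    size_mm = size_px * pixel_pitch_mm
    return math.degrees(2.0 * math.atan(size_mm / (2.0 * viewing_distance_mm)))


def degrees_to_pixels(angle_deg, pixel_pitch_mm, viewing_distance_mm):
    """Inverse of pixels_to_degrees under the same assumptions."""
    size_mm = 2.0 * viewing_distance_mm * math.tan(math.radians(angle_deg) / 2.0)
    return size_mm / pixel_pitch_mm


# Hypothetical display: 0.25 mm pixel pitch viewed from 600 mm.
angle = pixels_to_degrees(100, pixel_pitch_mm=0.25, viewing_distance_mm=600.0)
pixels = degrees_to_pixels(angle, pixel_pitch_mm=0.25, viewing_distance_mm=600.0)
```

The conversion is not linear in general, so a plot drawn in pixels cannot simply be relabeled in degrees unless the extents are small enough for the small-angle approximation to hold. Head-mounted displays with lenses need a device-specific mapping instead of this flat-screen formula.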

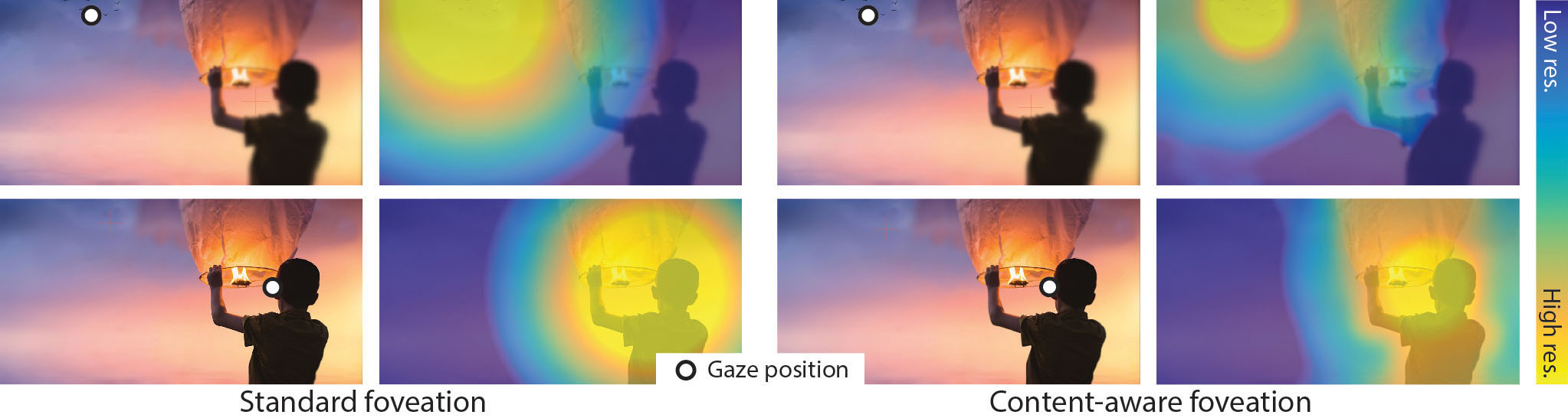

Abstract Current rendering techniques struggle to fulfill quality and power efficiency requirements imposed by new display devices such as virtual reality headsets. A promising solution to overcome these problems is foveated rendering, which exploits gaze information to reduce rendering quality for the peripheral vision where the requirements of the human visual system are significantly lower. Most of the current solutions model the sensitivity as a function of eccentricity, neglecting the fact that it is also strongly influenced by the displayed content. In this work, we propose a new luminance-contrast-aware foveated rendering technique which demonstrates that the computational savings of foveated rendering can be significantly improved if the local luminance contrast of the image is analyzed. To this end, we first study the resolution requirements at different eccentricities as a function of luminance patterns. We later use this information to derive a low-cost predictor of the foveated rendering parameters. Its main feature is the ability to predict the parameters using only a low-resolution version of the current frame, even though the prediction holds for high-resolution rendering. This property is essential for the estimation of required quality before the full-resolution image is rendered. We demonstrate that our predictor can efficiently drive the foveated rendering technique and analyze its benefits in a series of user experiments.
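To make the idea of analyzing local luminance contrast on a low-resolution frame more concrete, here is a minimal sketch. It is not the predictor from the paper: it swaps in a generic local RMS contrast (standard deviation divided by mean within a small window) computed on a box-downsampled luminance grid. The function names, window radius, and downsampling factor are all hypothetical choices made for illustration.

```python
import math


def downsample(lum, factor):
    """Box-filter a 2D luminance grid (list of rows) by an integer factor."""
    h, w = len(lum) // factor, len(lum[0]) // factor
    area = factor * factor
    return [
        [
            sum(
                lum[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ) / area
            for x in range(w)
        ]
        for y in range(h)
    ]


def local_rms_contrast(lum, radius=1, eps=1e-6):
    """Per-pixel RMS contrast: std / mean of luminance in a square window.

    The window is clipped at the image borders. This is a generic contrast
    measure used as a stand-in, not the measure defined in the paper.
    """
    h, w = len(lum), len(lum[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            patch = [
                lum[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            mean = sum(patch) / len(patch)
            var = sum((v - mean) ** 2 for v in patch) / len(patch)
            out[y][x] = math.sqrt(var) / (mean + eps)
    return out


# Hypothetical 8x8 frame with a vertical luminance edge down the middle.
frame = [[10.0] * 4 + [100.0] * 4 for _ in range(8)]
low_res = downsample(frame, 2)            # 4x4 preview of the frame
contrast = local_rms_contrast(low_res)    # non-zero only near the edge
```

In a content-aware scheme, a map like `contrast` could be combined with eccentricity to decide where resolution can be reduced; how the paper's predictor actually performs that mapping is described in the paper itself and is not reproduced here.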

Acknowledgements This project was supported by the Fraunhofer and Max Planck cooperation program within the German Pact for Research and Innovation (PFI), an ERC Starting Grant (PERDY-804226), the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 642841 (DISTRO), and the Polish National Science Centre (decision number DEC-2013/09/B/ST6/02270). The perceptual experiments conducted in this project were approved by the Ethical Review Board of Saarland University (No. 18-11-4).